Alpha Release

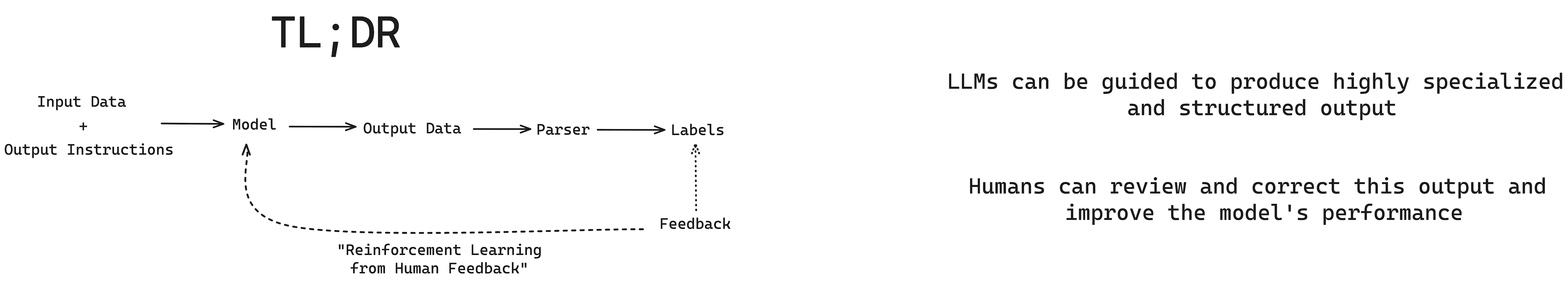

TL;DR: Protege Engine

Protege Engine is an AI system developed by Intuitive Systems and designed as a drop-in replacement for Large Language Model (LLM) inference APIs, such as those provided by OpenAI.

It lets users add LLM inference and training functionality to their products with minimal engineering effort, through its reinforcement learning from human feedback (RLHF) interface and easy-to-use SDK.

Because it integrates into existing user interfaces, Protege Engine reduces the Total Cost of Ownership (TCO) of AI pipelines and improves user outcomes in domain-specific contexts. It is aimed at anyone who wants to use advanced AI capabilities without relying on expensive, hard-to-find technical resources.

Things you need to know:

- Predictions: A prediction is one call-and-response exchange with the inference backend. A prompt is sent, a completion comes back, and the completion is parsed into labels so that downstream applications receive structured output.

- Label Parsers: Components that convert completions from the inference process into structured labels, so that applications can interpret and use AI-generated output.

- Feedback Mechanism: The step in which users approve or correct prediction labels through the UI. These reviewed labels drive dataset preparation for further training, which improves model accuracy over time.

- Inference Backends: The compute that runs the model's inference. Each backend is an instance with an API endpoint that proxies requests and traces their execution; predictions are generated through it.

- Datasets: Collections of training examples compiled from prompts. Datasets can be synthetic or standard; their purpose is to train the model to replicate the behavior demonstrated in the prompts, supporting its continued learning and adaptation.
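Taken together, these concepts form a loop: a prompt goes to an inference backend, a label parser turns the completion into a structured label, a human approves or corrects that label, and the reviewed predictions become training data. The Python below is a minimal sketch of that loop under stated assumptions. It is not Protege Engine's SDK: `Prediction`, `json_label_parser`, `predict`, `apply_feedback`, `build_dataset` and `fake_backend` are hypothetical names chosen for illustration. The backend is a stub function rather than a real API endpoint, and labels are assumed to be JSON objects embedded in the completion.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical types and functions for illustration only; not the Protege Engine SDK.


@dataclass
class Prediction:
    prompt: str
    completion: str
    label: Optional[dict] = None
    approved: bool = False  # set by the (simulated) human feedback step


def json_label_parser(completion: str) -> Optional[dict]:
    """Hypothetical label parser: extract the outermost {...} span as JSON."""
    start, end = completion.find("{"), completion.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        return json.loads(completion[start:end + 1])
    except json.JSONDecodeError:
        return None


def predict(prompt: str,
            backend: Callable[[str], str],
            parser: Callable[[str], Optional[dict]]) -> Prediction:
    """One prompt/completion exchange, with the completion parsed into a label."""
    completion = backend(prompt)
    return Prediction(prompt=prompt, completion=completion, label=parser(completion))


def apply_feedback(pred: Prediction, corrected_label: Optional[dict] = None) -> Prediction:
    """Approve the parsed label, or replace it with a human correction."""
    if corrected_label is not None:
        pred.label = corrected_label
    # A prediction with no usable label and no correction stays unapproved.
    pred.approved = pred.label is not None
    return pred


def build_dataset(preds: list[Prediction]) -> list[dict]:
    """Keep only approved predictions as prompt/completion training examples."""
    return [
        {"prompt": p.prompt, "completion": json.dumps(p.label)}
        for p in preds
        if p.approved
    ]


def fake_backend(prompt: str) -> str:
    """Stand-in for an inference backend; a real one would call a model API."""
    if "refund" in prompt:
        return 'Sure. {"intent": "refund"}'
    return "I am not sure."


# Usage: two predictions get feedback (one approved, one corrected), one gets none.
preds = [predict(p, fake_backend, json_label_parser)
         for p in ["I want a refund", "Where is my order?", "Hello"]]
apply_feedback(preds[0])                              # approve the parsed label
apply_feedback(preds[1], {"intent": "order_status"})  # correct an unparseable completion
dataset = build_dataset(preds)                        # preds[2] was never reviewed
```

Only reviewed predictions reach the dataset in this sketch, which mirrors the role the feedback step plays above: human approval or correction is what turns raw completions into training examples.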